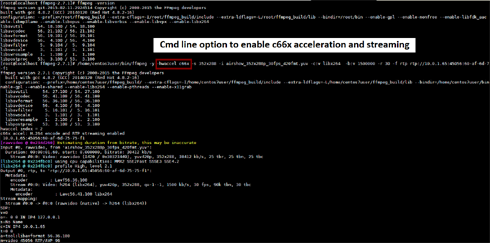

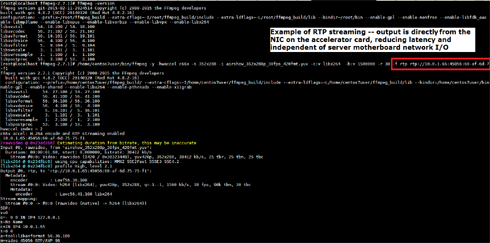

| FFmpeg screen captures with c66x acceleration enabled |

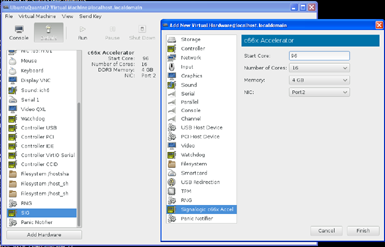

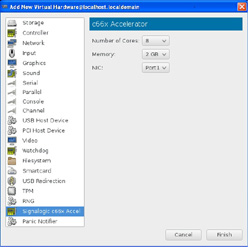

| Virtual ffmpeg hardware with c66x accelerator (VMM dialog / VM configuration screen cap) |

| Side-by-side desktop capture and tablet player, with c66x accelerator low-latency RTP streaming |

FFmpeg Accelerator -- FFmpeg Hardware Acceleration

- Overview

- Product Information

- Why Use Hardware Acceleration

- FFmpeg Command Line Examples

- FFmpeg in VMs

- HPC Software Model

- CPU vs. GPU Overview

- How To Purchase

- Related Items

- Tech Support

Overview

- Maximize ffmpeg performance with a combined software + hardware solution

- Compatible with Linux servers, all form-factors

- Use the standard ffmpeg command line

- Multiple streams supported, with endpoint combinations of file, RTP, and MPEG-TS

- H.264 standard settings and some presets supported

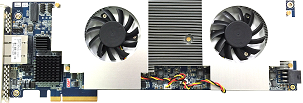

- Latency control supported; accelerator cards have their own dedicated NIC to reduce latency

- Add up to 512 CPU cores in a standard server to dramatically increase ffmpeg concurrency

- Run multiple, concurrent ffmpeg instances in multiple VMs, without decreased performance

Product Information

Information below for the software and for the 32-core and 64-core accelerators includes specifications, documentation links, and technical support.

| Signalogic Part # | DHSIG-CIM32-PCIe / DHSIG-CIM64-PCIe |
| System Manufacturer | Signalogic |
| Description | FFmpeg Accelerator |
| Product Category | HPC (High Performance Computing) |
| Product Status | Preliminary |

Availability

Stock: 0

On Order: 0

Factory Lead-Time: 8 weeks

Pricing (USD)

Qty 1: 4500 MOQ: 1 †

Promotions

Current Promotions: None

Why Use Hardware Acceleration

Various vendors talk about GPU, DSP, FPGA, etc., but these are all "really hard to use" solutions. These vendors miss the point, trying to prove that servers are not traditionally good at streaming and image processing. Obviously servers are plenty good at running ffmpeg and image processing. What is fair to say is that trends in server architecture, including virtualization, DPDK, and software-defined networking, have increased performance expectations of individual servers. In the past HPC users were willing to stack boxes; now, due to virtualization, they expect one box to be a stack of VMs -- without penalties in performance, latency, or network I/O throughput.

Signalogic's software and hardware acceleration products are designed to take full advantage of these server architecture trends. They augment modern servers, providing well-integrated functionality and user interfaces, as opposed to alternative, non-mainstream methods. User interfaces include the ffmpeg command line, OpenCV APIs, OpenMP programming, and VM configuration. Within VM configuration, our products add cores, DDR3 memory, and NIC resources that can be used with or without virtualization. The result is improved server performance density, reduced latency, and increased stream concurrency, without having to use proprietary programming languages, study a 300-page chip vendor data sheet, work with low-level "SDKs", or take on other time-consuming development efforts.

FFmpeg Command Line Examples

Below are some example ffmpeg commands. Adding the option "-hwaccel c66x" enables c66x hardware acceleration.

./ffmpeg -y -hwaccel c66x -s 352x288 -i ../video_files/airshow_352x288p_30fps_420fmt.yuv -c:v libx264 -b 1500000 -r 30 ../video_files/test.h264

./ffmpeg -y -hwaccel c66x -s 352x288 -i ../video_files/airshow_352x288p_30fps_420fmt.yuv -c:v libx264 -b 1500000 -r 30 -f rtp rtp://10.0.1.65:45056:60-af-6d-75-75-f1

./ffmpeg -y -hwaccel c66x -s 352x288 -i ../video_files/airshow_352x288p_30fps_420fmt.yuv -c:v libx264 -b 1500000 -r 30 ../video_files/test.h264 -f rtp rtp://10.0.1.65:45056:60-af-6d-75-75-f1
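The example commands share the same input and encoder options and differ only in the output endpoint (a file, an RTP destination, or both). As a rough sketch of that pattern, the shell function below assembles a single-output command line and prints it without running anything. The names `FFMPEG_BIN` and `build_encode_cmd` are hypothetical helpers introduced here for illustration, and the default binary path is an assumption; the options themselves are only those that appear in the examples.

```shell
#!/bin/sh
# Sketch only: prints an ffmpeg command line, does not execute it.
# FFMPEG_BIN and build_encode_cmd are illustrative names, not part of the product.
FFMPEG_BIN="${FFMPEG_BIN:-./ffmpeg}"   # assumed location of the ffmpeg binary

build_encode_cmd() {
    input=$1      # raw YUV input file
    size=$2       # frame size, e.g. 352x288
    bitrate=$3    # target bitrate in bits per second
    fps=$4        # frame rate
    output=$5     # output file path or rtp:// destination

    # RTP outputs are preceded by "-f rtp", as in the examples above
    case $output in
        rtp://*) fmt="-f rtp " ;;
        *)       fmt="" ;;
    esac

    printf '%s -y -hwaccel c66x -s %s -i %s -c:v libx264 -b %s -r %s %s%s\n' \
        "$FFMPEG_BIN" "$size" "$input" "$bitrate" "$fps" "$fmt" "$output"
}

# File output
build_encode_cmd ../video_files/airshow_352x288p_30fps_420fmt.yuv \
    352x288 1500000 30 ../video_files/test.h264

# RTP output
build_encode_cmd ../video_files/airshow_352x288p_30fps_420fmt.yuv \
    352x288 1500000 30 rtp://10.0.1.65:45056:60-af-6d-75-75-f1
```

Printing the command first makes it easy to review the options before running it by hand on a system with the accelerator installed.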

FFmpeg in VMs

c66x accelerator resources can be assigned to VMs, allowing multiple instances of ffmpeg to run concurrently, each with its own accelerator cores, memory, and streaming network I/O, independent of the server motherboard. Currently the KVM hypervisor is supported, along with QEMU, libvirt, and virt-manager. Below is an image showing installation of an Ubuntu VM running on a CentOS host:

| VM configuration screen cap, showing c66x accelerator resources |

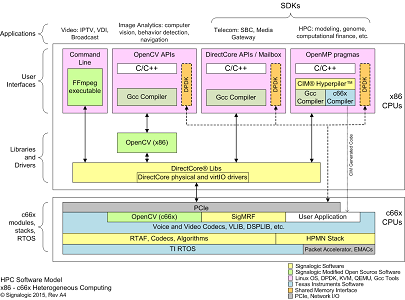

HPC Software Model

Below is a software model diagram that shows how c66x CPUs are combined with x86 CPUs inside high performance computing (HPC) servers. The user interface varies in complexity from left to right, from simple (command line) to complex (OpenMP pragmas). Pragmas are compiled by the CIM® Hyperpiler™, which separates C/C++ source into "source code streams" and augments the streams with additional, auto-generated C/C++ source code. Multiple different applications can run at the same time, as concurrent host processes, concurrent VMs, or a combination. Adding DPDK capability to application user space is optional.

| Software model for combination of c66x and x86 CPUs in HPC servers |

Notes

1. CIM® = Compute Intensive Multicore

2. RTAF = Real-time Algorithm Framework

3. HPMN = High Performance Multicore Network stack

CPU vs. GPU Overview

CPU and GPU devices are constructed from fundamentally different chip architectures. Each is very good at certain things and not so good at others, and these strengths and weaknesses are mostly opposites, or complementary. In general:

- CPUs tend to be good at complex algorithms that require random memory access, non-uniform treatment of data sets, unpredictable decision paths, and interfaces to peripherals (network I/O, PCIe, USB, etc.).

- GPUs tend to be good at well-defined algorithms that operate uniformly on large data sets, accurate and very fast math, and graphics applications of all types.

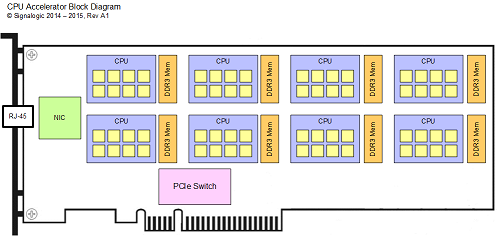

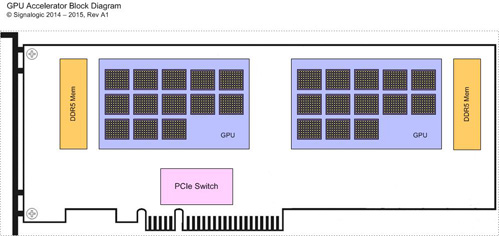

In comparing the diagrams above, some obvious differences and similarities stand out:

- GPU cores are sometimes called "CUDA cores", in reference to Nvidia's CUDA programming platform. It's not easy to compare CPU and GPU cores (apples and oranges). Maybe the easiest way to think about it is (i) for any given math calculation, a GPU core can almost always do it much faster, and (ii) GPU cores do not run arbitrary C/C++, Java, or Python code, so they're not programmable in the conventional sense.

- A GPU accelerator can bring far more processing power to bear on massively parallel problems. Examples include graphics, bitcoin mining, climate simulations, and DNA sequencing -- any problem where the data set can be subdivided into "regions", such that the results of one region do not depend on others.

- A CPU accelerator typically has its own NIC (or more than one), which can provide advantages in reduced latency and "data localization" -- bringing the compute cores closer to the data. Onboard NICs are typically not found on GPU accelerators, as GPU cores are not designed to run device drivers, a TCP/IP stack, etc.

- Both types of accelerators take full advantage of the high performance PCIe interfaces found in modern servers, including multiple PCIe slots and risers, accessibility to DPDK cores, and excellent software support in Linux.

How To Purchase

Enter the fields shown above, including:

Related Items
